Technical Documentation

Running batch jobs

This page describes functionality that is no longer supported. For asynchronous delivery of bulk property data, please use Bulk data feeds.

Batch jobs provide an efficient way to execute bulk operations, including:

- Downloading property data for up to 1 million properties (see Property details jobs.

- Obtaining property IDs for up to 100,000 properties by address (see Match jobs).

- Obtaining property IDs for large numbers of properties that match the specified search criteria (see Search jobs).

These batch job types (property-details, match, and search) use the POST /v2/jobs/file

endpoint.

Rate limiting

Although you can submit up to 5 API requests per second, batch jobs run sequentially and you won't be able to submit another job until the first one either completes or fails. If you submit a batch job while another is in progress, the API returns HTTP status 429.

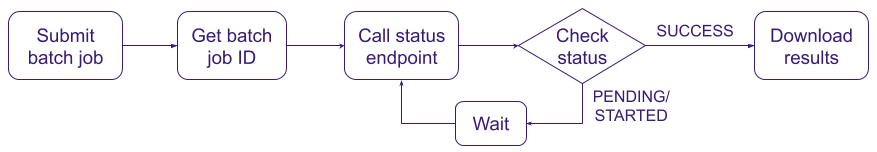

Batch job workflow

Batch jobs run asynchronously, meaning you submit your request and must wait until the operation completes before you can download the results. The steps to do this are:

Create a batch job specifying the job type (

property-detailsormatch) and the job details:Job type Job details property-detailsSpecify the type of data you want (for example, ownership) and list of property IDs (up to 1 million).matchProvide a CSV file with a list of property addresses or lat/lon coordinates (up to 100,000). Get the job ID from the initial API response.

Poll the API to determine when the job is complete.

Download the resulting data using the URL(s) returned by the API:

Job type Returns property-detailsThe property data for the requested properties. matchYour CSV file with the matching Reonomy property ID appended to each row.

Property details jobs

POST https://api.reonomy.com/v2/jobs/fileFor property details jobs ("job_type": "property-details"), you must include a job spec file specifying a list of property IDs and the type of information you're seeking (the detail_type). You can include multiple types in a single request. Valid detail types are:

basicmortgagesownershipreported_ownersalestaxestenants

Billing

Your account is billed based on property data, not API calls. If you use the batch endpoint to download data for 10,000 properties, it's equivalent to making 10,000 calls to the GET /v2/property/{property_id} endpoint. Although you could write a script to call the GET endpoint for each property ID, requests are rate limited, making it difficult to effectively download bulk data. Use of the batch API is highly recommended for any kind of bulk operations.

You can upload the job specification in two ways:

- By referencing a file on your local machine using the

filekeyword in the request (see Submitting the batch job below). - By creating a file-like object and including it in the request (see Specifying the job spec in code below).

Preparing the job spec file

When uploading the job spec as a file, the file must be a JSON file that includes:

- The type of information you're requesting (the

detail_type). You can specify multiple types. See the list of valid detail types above. - A list of Reonomy property IDs (the

property_idslist).

A sample JSON file requesting basic and ownership information for 10 properties is shown here.

You can optionally include an updated field in the JSON file, indicating that you want only properties with data updated on or after the specified date, in YYYY-MM-DD format (see the example). Any types you specify must also be in the detail_type list. If you specify multiple updated types, the API returns properties where at least one of the types was updated on or after the specified date (OR).

Additionally, you may exclude personally identifiable information (PII) from the results by including "filter_pii": true in the optional filters section of the JSON file, as shown in the example. For more information about PII and PII-related fields, see Excluding personally identifiable information (PII).

{

"detail_type": [

"basic",

"ownership"

],

"property_ids": [

"51e2200a-5160-5ab1-8f98-20a17fedbb87",

"60a041c1-06e9-5b56-9468-2d398b07de75",

"6ea9347d-5cff-59db-81ef-3713478b510f",

"e8381345-31be-5d87-b397-e3f9e72106a6",

"8cded838-df59-5d20-aef0-f1841dcbc757",

"1998456b-0a01-50b5-864e-6147f755c2a3",

"c41df528-67af-5555-8778-438c78b3bd08",

"0665d470-30e0-5f28-aee7-4a96fa2128bb",

"39af0b74-cf53-52d6-bc9b-8748890c3141",

"5eb81ab6-a086-5d78-a94a-8d2dd89f9955"

],

"updated": {

"basic": "2020-01-01",

"ownership": "2020-02-01"

},

"filters": {

"filter_pii": true

}

}Submitting the batch job

Creating the POST request

The POST /v2/jobs/file endpoint requires that your HTTP request:

- Specifies the job type as a

formobject. - Specifies the job spec as a

filesobject.

Creating a low level HTTP request for this is tricky, so use of an HTTP client library is highly recommended (see the sample code). If you're using Node.js, see Using Node.js below for additional information.

Important: Do not specify a Content-Type header when calling this endpoint. Client HTTP libraries will include the appropriate Content-Type header for you. If you receive an HTTP 400 FileNotFoundAtPathError, it's likely your POST request is not formatted as required by the API. See Troubleshooting below for more information.

The example shows how to specify a JSON file, but you can select your own JSON file below. If you've entered your API access token, you can then use the ► RUN button to run your request against the API and see the response.

Response

If the POST request is successful, the API returns HTTP status 201 ("Created") along with details of the batch job. Within the response, the id is the job ID you'll use to check on the job status. The job status is always null initially.

- If you run the query and get an

AuthorizationException, use the Access Token button in the top navigation bar to set your API access token. - If you get a

FileNotFoundAtPathError, make sure you selected a local CSV file using the File button above. - If you receive an HTTP 400

FileNotFoundAtPathError, it's likely yourPOSTrequest is not formatted as required by the API. See Troubleshooting below for more information.

Specifying the job spec in code

It's also possible to create a file-like object with the job specification and upload that with the batch job request. This can be useful if, for example, you used POST /search/summaries to generate a list of properties. The implementation is language specific but the example shows how you might do this in Python.

# Create list of IDs from POST /search/summaries response

property_ids = [item["id"] for item in response["items"]]

payload = json.dumps({

"detail_type": ["basic", "ownership"],

"property_ids": property_ids

})

file_obj = io.StringIO(payload)

file_obj.name = "payload.json"

files = [

("file", file_obj)

]

data = {

"job_type": "property-details"

}

response = requests.post(url, data=data, files=files, headers=headers).json()

Checking the job status

GET https://api.reonomy.com/v2/jobs/file/{job_id}

GET https://api.reonomy.com/v2/jobs/file

Your code should check the job status periodically (for example, once a second) to know when the job is complete. To do this, use the GET /v2/jobs/file/{job_id} endpoint with the job ID returned when you created the batch job, or use GET /v2/jobs/file specifying limit: 1 to get the most recent job.

Response

GET /v2/jobs/file/{job_id} returns a JSON response with a structure like the initial batch API response. The field you need to check is the status field.

When you create a batch job, the API queues the job and sets the status to PENDING. The status changes to STARTED when the job starts, and SUCCESS when the job completes successfully. The response also includes result_url and result_urls fields you can use to download the results (see Viewing the results below).

The time for a batch job to complete varies depending on the availability of server-side resources and the size of the batch job, but typically ranges from a few seconds for small jobs to a few minutes for very large jobs.

If you run the query and get an AuthorizationException, use the Access Token button in the top navigation bar to set your API access token.

Viewing the results

When a job completes with status SUCCESS, the GET /v2/job/file/{job_id} response includes result_url and result_urls attributes (shown in the example) specifying the URL(s) you can use to download the resulting property data as a file. If you submit more than 25,000 property IDs, the API returns the results in batches of 25,000:

result_urlis a comma-separated string of result URLs.result_urlsis an array of result URLs.

The file type returned by each result URL is NDJSON. Within the file, each property is represented as a separate JSON object, with a new line (\n) character between each object. Large scale data analysis tools like Spark and BigQuery are optimized for NDJSON and can read files incrementally, which is important since the download size can be very large.

Result URLs are valid only for 10 minutes after the job completes, so be sure to download the results as soon as you see 'status': 'SUCCESS'. If you don't, you'll get an error page indicating that The specified key does not exist. If this happens, call GET /v2/jobs/file/{job_id} again to get a new URL.

{

"created": "2020-01-03T16:34:52.104383+00:00",

"group_path": "a36a93decf664f9d8bddfefd2bb4fb57",

"id": "fe9f0c8f-717d-48f7-9974-50430094236c",

"job_type": "property-details",

"message": null,

"metadata": {

"headers": [],

"labels": []

},

"modified": "2020-01-03T16:35:09.550790+00:00",

"progress": null,

"result_url": "https://reonomy-viserion-prd.s3.amazonaws.com/job_cache/afcc88d7-58d0-44a5-9894-5243bf111ba8_0.ndjson?AWSAccessKeyId=ASIA...&Signature=9msvmgn0Oott8Tbx71bdhK8YL%2Bc%3D&x-amz-security-token=IQoJb3JpZ2luX2VjEPb%2F%2F%2F%2F%2F%2F%2F%2F%2F%2FwEaCXVzLWVhc3QtMSJIMEYCIQDNyVqvIYzTO0bSgkyc1y9MxXkAxlbBRfWYBIDVh4rNMgIhALv%2BYY1E49%2FPUuVZaM82au0IvruOrSNUUw35jiNynz8dKvcDCN7%2F%2F%2F%2F%2F%2F%2F%2F%2F%2FwEQABoMNjUyMTk3NzIxNzI4IgyC9MmtYmmjD9B9XNYqywOqxN4xnHMRqXR2zElWzw7m%2B5L8rGr1B%2FeFFI6bhL0IWfj2vIPzGfTocpfX2Xqh57zRIyItDg8QD0v%2BymcmsdlbYilLEqRGmDf5kW0gbDWETZx4Yadd2QMTw9%2BlP69djBR85mYOZzmMrrIrI04YvdVn99lR6shas64SQxDUIWlaE0gViTGOjSQhrvN4Mh3cqZCX9pNsx8qEKZ7CgMTxgqlYIEGOyjXFXqPs%2BNl5yugIiu%2BzfeGmQB9XaccK6jvqBQR5lxTTKpJ14FGQ3OvuvsBGPf0k8BnrLIzZXsJIvQUmXuIO0XMDkNnxr2uRCp73Fq9bKVhlUb9JaTIOYFA3yf41%2Bz4VotvWFsbmBLRCOtzAWuSg2xlu2pRiM1Pvefdzn469Aeh3AqWw1%2Fp1pXGsvVtNhOJvJ8BDUSi%2F35HDIRxrGumKw6ke5u2wmn76q3AQcbbJZDbbjeymSo%2BxlC3ZnopFwrUJ2Dtez6%2FfydinARyCNIiruK1w9bIciKLjk9oAbgHf%2FwyBGTqiKmHweUcrU2A75M4WyXUZ%2BChhLe28H%2B6GEUJsnnRj71yaxAT9bU8px%2FasOQPCyBN8Zs02gPAfAooghmA4fS6gYtY9zrUwhcTP8wU67gFw%2F8eQ9VtLkxNVtYO1D7OJO%2FlZooEwISVA8fWfEUJCE5H0qWVo5xy7Zg77gBZeSIbcEJiHxXB3V4%2B3X41syYEEk6vYXXiEQNNa0l5Oz%2BPR0zhiPbBpAVT%2Bb49gWJzc6sJafG%2FKDRevUcGg2oUnOgC8nr9xeVnT%2BApd0xrMaF8%2B6vhUWjKBXZj8ZjYr0166Ie45Ab%2BkEEIfV3FcZyHg38tdVVOAPFNa9kzU0it2lj7NkxpYw1YkF0ky7qKRKUvr%2BZpFZVR4FJEbOk7t8i0usBx9nTZ4J0w%2BiangVi9aTUVhS9jlpAoK7tAiIKX42fUH&Expires=1584656880",

"result_urls": [

"https://reonomy-viserion-prd.s3.amazonaws.com/job_cache/afcc88d7-58d0-44a5-9894-5243bf111ba8_0.ndjson?AWSAccessKeyId=ASIA...&Signature=9msvmgn0Oott8Tbx71bdhK8YL%2Bc%3D&x-amz-security-token=IQoJb3JpZ2luX2VjEPb%2F%2F%2F%2F%2F%2F%2F%2F%2F%2FwEaCXVzLWVhc3QtMSJIMEYCIQDNyVqvIYzTO0bSgkyc1y9MxXkAxlbBRfWYBIDVh4rNMgIhALv%2BYY1E49%2FPUuVZaM82au0IvruOrSNUUw35jiNynz8dKvcDCN7%2F%2F%2F%2F%2F%2F%2F%2F%2F%2FwEQABoMNjUyMTk3NzIxNzI4IgyC9MmtYmmjD9B9XNYqywOqxN4xnHMRqXR2zElWzw7m%2B5L8rGr1B%2FeFFI6bhL0IWfj2vIPzGfTocpfX2Xqh57zRIyItDg8QD0v%2BymcmsdlbYilLEqRGmDf5kW0gbDWETZx4Yadd2QMTw9%2BlP69djBR85mYOZzmMrrIrI04YvdVn99lR6shas64SQxDUIWlaE0gViTGOjSQhrvN4Mh3cqZCX9pNsx8qEKZ7CgMTxgqlYIEGOyjXFXqPs%2BNl5yugIiu%2BzfeGmQB9XaccK6jvqBQR5lxTTKpJ14FGQ3OvuvsBGPf0k8BnrLIzZXsJIvQUmXuIO0XMDkNnxr2uRCp73Fq9bKVhlUb9JaTIOYFA3yf41%2Bz4VotvWFsbmBLRCOtzAWuSg2xlu2pRiM1Pvefdzn469Aeh3AqWw1%2Fp1pXGsvVtNhOJvJ8BDUSi%2F35HDIRxrGumKw6ke5u2wmn76q3AQcbbJZDbbjeymSo%2BxlC3ZnopFwrUJ2Dtez6%2FfydinARyCNIiruK1w9bIciKLjk9oAbgHf%2FwyBGTqiKmHweUcrU2A75M4WyXUZ%2BChhLe28H%2B6GEUJsnnRj71yaxAT9bU8px%2FasOQPCyBN8Zs02gPAfAooghmA4fS6gYtY9zrUwhcTP8wU67gFw%2F8eQ9VtLkxNVtYO1D7OJO%2FlZooEwISVA8fWfEUJCE5H0qWVo5xy7Zg77gBZeSIbcEJiHxXB3V4%2B3X41syYEEk6vYXXiEQNNa0l5Oz%2BPR0zhiPbBpAVT%2Bb49gWJzc6sJafG%2FKDRevUcGg2oUnOgC8nr9xeVnT%2BApd0xrMaF8%2B6vhUWjKBXZj8ZjYr0166Ie45Ab%2BkEEIfV3FcZyHg38tdVVOAPFNa9kzU0it2lj7NkxpYw1YkF0ky7qKRKUvr%2BZpFZVR4FJEbOk7t8i0usBx9nTZ4J0w%2BiangVi9aTUVhS9jlpAoK7tAiIKX42fUH&Expires=1584656880"

],

"status": "SUCCESS",

"user": "654e9cb7-db66-40cc-8538-c88cebbfa935"

}NDJSON object structure

As noted above, each result URL returns an NDJSON file. Within a file, each property is represented as a separate JSON object, and a new line (\n) character separates each object.

The example on the right shows an NDJSON file with two properties that's been converted to an array of JSON objects. If you expand one of the top level nodes, you can see the structure of the JSON object for the property. In this case, we requested all data types (basic, mortgages, ownership, etc.) in the job spec file, so all the available Reonomy property data is included.

Within the property object, the order of items is arbitrary. If you scroll through the object, you'll see a node for each requested data type, except that basic attributes (active, municipality, etc.) are displayed at the top level, not under a basic node. Additionally, the ownership contact information is listed under its own contacts node.

Although an NDJSON file includes valid JSON objects, the file itself isn't valid JSON, so you can't read it using a standard JSON reader. However, for relatively small files, you can read the file line-by-line and process each line using a standard JSON reader. The example shows how you can convert an NDJSON file to a standard JSON list using Python.

import json

with open('./results.ndjson') as ndjson:

results = [json.loads(line) for line in ndjson]

Troubleshooting

If you receive an HTTP 400 FileNotFoundAtPathError, it's likely your POST request is not formatted as required by the API.

To determine what's wrong with the request, you might find it helpful to send the POST request to https://httpbin.org/post to see how the client library you're using is constructing the HTTP request. httpbin.org is a service that returns the request to you so you can see exactly how it was formatted by your client library. This can help identify requests that are improperly formatted or libraries that are incompatible. An example of a good response from https://httpbin.org/post is shown here.

Using Node.js

Some Node.js HTTP libraries (for example, node-fetch and axios) do not natively support multipart/form-data streams and instead recommend using the form-data package to submit forms and file uploads. However, form-data creates objects that are incompatible with the Reonomy API and you may receive an HTTP 400 FileNotFoundAtPathError error. Additionally, see https://github.com/axios/axios/issues/789 for information about axios incompatibilities.

Node.js request, request-promise, and request-promise-native all support multipart/form-data streams and work with the Reonomy API. However, they do not by default include a User-Agent header, which is required by the Reonomy API, so be sure to set this manually, as shown in the examples.

{

"args": {},

"data": "",

"files": {

"file": "{'detail_type': ['basic'],'property_ids': ['1372eae1-228e-5901-9eb6-919cf676731c'],'updated': '2018-01-01'}"

},

"form": {

"job_type": "property-details"

},

"headers": {

"Authorization": "Basic ABCD1234",

"Content-Length": "462",

"Content-Type": "multipart/form-data; boundary=--0546582662",

"Host": "httpbin.org",

"User-Agent": "node"

},

"json": null,

"origin": "174.44.104.54, 174.44.104.54",

"url": "https://httpbin.org/post"

}Match jobs

POST https://api.reonomy.com/v2/jobs/fileA match job ("job_type": "match") lets you upload a CSV file with property addresses or lat/lon coordinates (maximum 100,000) and returns a CSV file with the Reonomy property ID inserted into each row.

| Address1 | City | State | Zip |

|---|---|---|---|

| 767 3rd Ave | New York | NY | 10017 |

| 20 W 34th St | New York | NY | 10001 |

| reonomy_id | status | miss_reason | Address1 | City | State | Zip |

|---|---|---|---|---|---|---|

| 19cd94b6-0b51-543d-bfc1-3ed3205d13ae | OK | 767 3rd Ave | New York | NY | 10017 | |

| 66258e65-0022-5af5-831e-22443f6dd854 | OK | 20 W 34th St | New York | NY | 10001 |

Preparing the CSV file

For addresses, the CSV file you upload must have at a minimum four columns:

- One for the street address

- One for the city name

- One for the state abbreviation

- One for the zip code

Address1,City,State,Zip

767 3rd Ave,New York,NY,10017

20 W 34th St,New York,NY,10001

For location coordinates, the CSV file must have at least the following columns:

- One for the latitude value

- One for the longitude value

You can create the CSV file using a standard text editor as shown, but more typically you'll export data as a CSV file from a database or an application like Excel. Make sure you include a header row with the column names.

Latitude,Longitude

40.7541851,-73.9735753

40.748562,-73.9879465

The CSV file can have as many additional columns as you want. However, if you have additional columns in your CSV file, you must include them all in the headers list when you construct the API request (see below).

FirstName,LastName,PhoneNumber,Address1,City,State,Zip,Country

John,Doe,212-555-1212,767 3rd Ave,New York,NY,10017,USA

Jane,Doe,212-555-1213,20 W 34th St,New York,NY,10001,USA

Submitting the match job

Mapping the columns

When you upload a match job, you must tell the API how your CSV file column headers map to the required API parameter names. The first table shows the four required address parameters and how they map to the column names used in the address examples above. The second table shows the required location parameters and how they map to the column names used in the location example above.

You define the mappings in a metadata object you submit with your POST request. The metadata object must include the following attributes:

headersspecifies the names of all the columns in your CSV file, in the correct order.mappingspecifies the required parameters and the columns they map to in your CSV file.

See the sample code below for an example of the required format.

| API parameter name | CSV header |

|---|---|

| address_line_1 | Address1 |

| address_city | City |

| address_state | State |

| address_postal | Zip |

| API parameter name | CSV header |

|---|---|

| latitude | Latitude |

| longitude | Longitude |

Creating the POST request

The POST /v2/jobs/file endpoint requires that you:

- Specify the job type and the job metadata as a

formobject. - Specify the job details file as a

filesobject.

Creating a low level HTTP request for this is tricky, so use of an HTTP client library is highly recommended (see the sample code). If you're using Node.js, see Using Node.js above for additional information.

Important: Do not specify a Content-Type header when calling this endpoint. Client HTTP libraries will include the appropriate Content-Type header for you. If you receive an HTTP 400 FileNotFoundAtPathError, it's likely your POST request is not formatted as required by the API. See Troubleshooting below for more information.

The prefilled example shows how you'd map the columns for the example CSV above, but you can select your own CSV file below and then map the columns yourself. If you've entered your API access token, you can use the ► RUN button to run your request against the Reonomy API and see the response.

Response

The response includes the job status, which is always null initially.

If you run the query and get an AuthorizationException, use the Access Token button in the top navigation bar to set your API access token. If you get a FileNotFoundAtPathError, make sure you selected a local CSV file using the File button above.

Checking the job status

GET https://api.reonomy.com/v2/jobs/file/{job_id}

GET https://api.reonomy.com/v2/jobs/file

Your code should check the job status periodically (for example, once a second) to know when the job is complete. To do this, use the GET /v2/jobs/file/{job_id} endpoint with the job ID returned when you created the batch job, or use GET /v2/jobs/file specifying limit: 1 to get the most recent job.

If the request to POST /v2/jobs/file is successful, the API returns HTTP status 201 ("Created") along with details of the batch job. Within the response, the id is the job ID.

The status in the response from the initial POST request is always null. When the API queues the job, its status becomes PENDING. When the job starts, the status is STARTED, and when it completes successfully the status is SUCCESS.

When the batch job completes, the API returns 'status': 'SUCCESS'. The response also includes a result_url you can use to download the results (see Viewing the results below).

Viewing the results

When a job completes with status SUCCESS, the GET /v2/job/file/{job_id} response includes a result_url attribute (shown in the example) specifying the URL you can use to download the results as a CSV file.

The results file includes everything in the original CSV file, plus the following columns:

| Column | Description |

|---|---|

reonomy_id | The matching property ID (if match was successful). |

status | The match resolution status: |

OK: A commercial property was found at the specified address or location. | |

MISS: No matching commercial property found. See miss_reason for additional information. | |

PARTIAL (for address search only): The supplied address was ambiguous. The returned property ID represents the best match available. | |

miss_reason | Additional information (if no match found): |

SFR: The property at this address or location is categorized as a single family residence (SFR), therefore no data is available. | |

Condo Property (SFR): The property at this address or location is categorized as a residential condominium, therefore no data is available. | |

MISSING: No known property at the supplied address or location. | |

Unrecognized address: Unable to match the supplied address or location for a reason other than those specified above. |

The example below shows the resulting CSV file for an upload CSV file that included additional columns.

{}| reonomy_id | status | miss_reason | FirstName | LastName | PhoneNumber | Address1 | City | State | Zip | Country |

|---|---|---|---|---|---|---|---|---|---|---|

| 19cd94b6-0b51-543d-bfc1-3ed3205d13ae | OK | John | Doe | 212-555-1212 | 767 3rd Ave | New York | NY | 10017 | USA | |

| 66258e65-0022-5af5-831e-22443f6dd854 | OK | Jane | Doe | 212-555-1213 | 20 W 34th St | New York | NY | 10001 | USA |

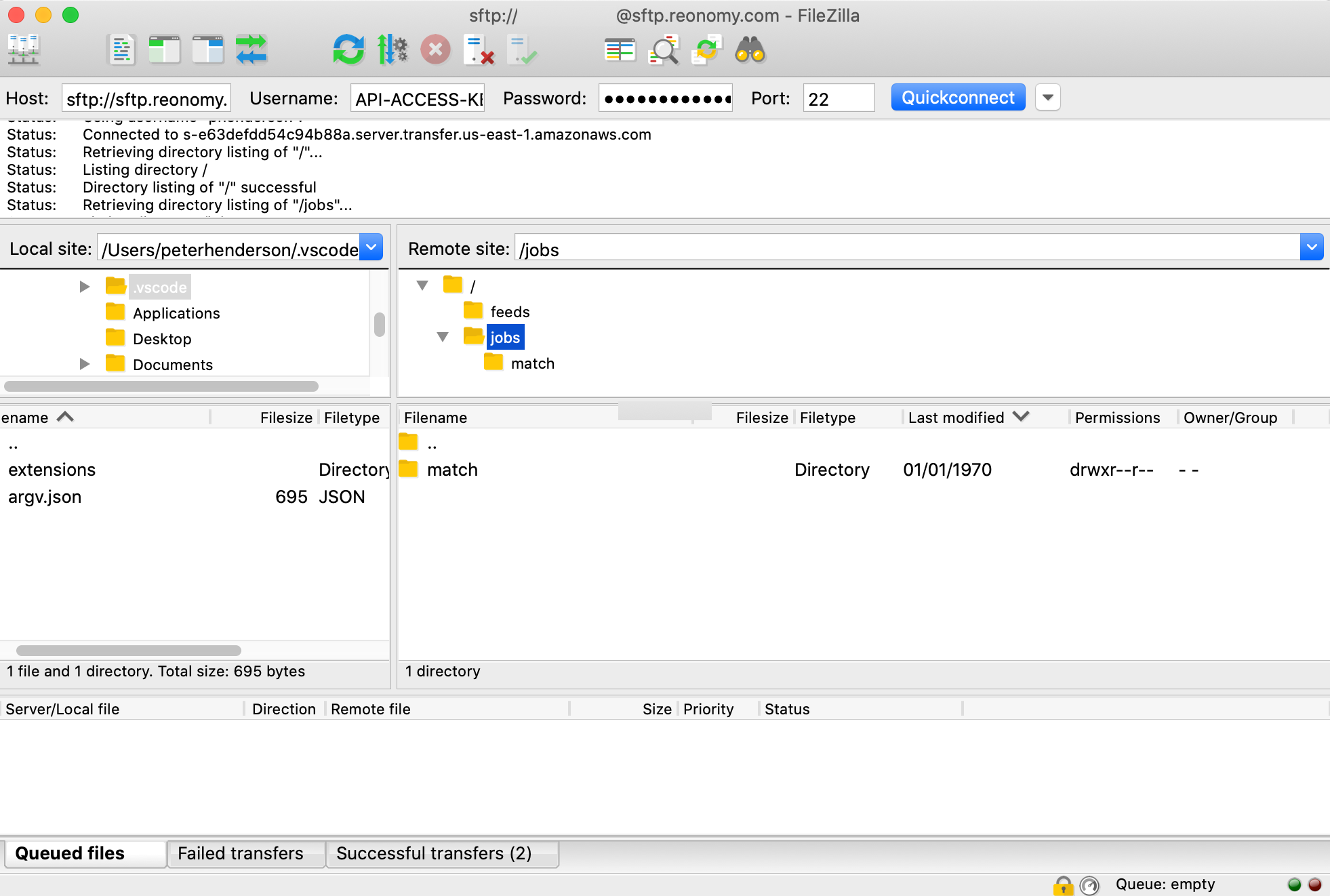

Accessing the results via SFTP

Match job output files are delivered to a location you can access via SFTP using your API access key and secret.

You can use any SFTP client to access the files:

- Connect to

sftp://sftp.reonomy.comon port 22 using your API access key as the username and your secret key as the password. - On the remote site, open the

jobs/matchfolder. You'll see your match job output files listed. - Download the file you want. Files are named using the batch job ID.

Troubleshooting

If you receive an HTTP 400 FileNotFoundAtPathError, it's likely your POST request is not formatted as required by the API. To determine what's wrong with the request, you might find it helpful to send the POST request to https://httpbin.org/post to see how the client library you're using is constructing the HTTP request.

Looking at the response from httpbin.org can help identify requests that are improperly formatted or libraries that are incompatible. An example of a good response from httpbin.org is shown here.

Using Node.js

See Using Node.js in the previous section for information about using Node.js HTTP libraries.

{

"args": {},

"data": "",

"files": {

"file": "Address1,City,State,Zip\n767 3rd Ave,New York,NY,10017\n20 W 34th St,New York,NY,10001"

},

"form": {

"job_type": "match",

"metadata": "{'headers':['Address1','City','State','Zip'],'mapping':{'address_line_1':'Address1','address_city':'City','address_state':'State','address_postal':'Zip'}}"

},

"headers": {

"Authorization": "Basic ABCD1234",

"Content-Length": "666",

"Content-Type": "multipart/form-data; boundary=--84191438266",

"Host": "httpbin.org",

"User-Agent": "node"

},

"json": null,

"origin": "174.44.104.54, 174.44.104.54",

"url": "https://httpbin.org/post"

}Search jobs

POST https://api.reonomy.com/v2/jobs/fileRequests to POST /search/summaries return a maximum of 10,000 property IDs in the initial response. If the search yields more properties than the limit, the API returns a search_token you can use to request additional matching items. This requires re-running the request, possibly multiple times, with the search_token as a query parameter (see Pagination).

As an alternative, you can run a bulk search job, (a batch job with "job_type": "search"), where you upload a JSON file with the search criteria (see below).

As with the other batch job types, the search job runs asynchronously, so you must poll the GET /jobs/file/{job_id} endpoint to determine when it is finished. When the job completes, GET /jobs/file/{job_id} returns a results_url you can use to download the results.

A batch search job is an alternative to POST /v2/search/summaries for obtaining property IDs. Use it when you expect the number of properties meeting the search criteria to be large.

Preparing the search file

The search file you upload with the POST request is a JSON file that specifies the search criteria, as shown in the example. The format is exactly the same as that used by the POST /v2/search/summaries request body.

One way to create the required JSON is to use the POST /search/summaries page to build your search interactively, then copy the request body into a file and save it with a .json extension.

{

"settings": {

"city": [

"New York"

],

"state": [

"NY"

],

"building_area": {

"min": 100000

}

}

}Running the search job

You submit the search job by making a POST request to /v2/jobs/file, specifying the job type as a form object and the JSON file as a files object, as shown in the example. For information about creating an HTTP request to do this, see Creating the POST request in the "Property details jobs" section.

Response

If the POST request is successful, the API returns HTTP status 201 ("Created") along with details of the batch job. Within the response, the id is the job ID you'll use to check on the job status.

The initial job status is always null. To check the current job status, use the GET /v2/jobs/file/{job_id} endpoint with the job ID, or use GET /v2/jobs/file specifying limit: 1 to get the most recent job.

The status changes to STARTED when the job starts, and SUCCESS when the job completes successfully. The response also includes result_url and result_urls fields you can use to download the results (see Viewing the results below).

Viewing the results

When a job completes with status SUCCESS, the GET /v2/job/file/{job_id} response includes result_url and result_urls attributes (shown in the example) specifying the URL(s) you can use to download the resulting property data as a file:

result_urlis a string with the result URL.result_urlsis a single element array with the result URL.

Result URLs are valid only for 10 minutes after the job completes, so be sure to download the results as soon as you see 'status': 'SUCCESS'. If you don't, you'll get an error page indicating that The specified key does not exist. If this happens, call GET /v2/jobs/file/{job_id} again to get a new URL.

{

"created": "2020-06-10T20:32:40.766389+00:00",

"group_path": "a36a93decf664f9d8bddfefd2bb4fb57",

"id": "48b9228d-a37a-44c1-b784-74478c1e3d47",

"job_type": "search",

"message": null,

"metadata": {

"headers": [],

"labels": []

},

"modified": "2020-06-10T20:32:55.853270+00:00",

"progress": null,

"result_url": "https://reonomy-viserion-prd.s3.amazonaws.com/job_results/d97e773b-d7cf-4483-81e3-7854378f7719.ndjson?AWSAccessKeyId=ASIA...&Signature=gDHagHpEjgFyvqJdwYeMT4vsLPg%3D&x-amz-security-token=IQoJb3JpZ2luX2VjEM7%2F%2F%2F%2F%2F%2F%2F%2F%2F%2FwEaCXVzLWVhc3QtMSJHMEUCIBTiTouH1RHi4eb0%2F4nRH2g9D4hQmqw6uyAC5lEOzWYqAiEA4as4Snhmsv2evC2E8RMCBEWcjoCB0Gg9zFwU7lkn7D0q7gMIRxAAGgw2NTIxOTc3MjE3MjgiDIY33uhJK7Nq3LeafSrLA3MVSxNXmlcl%2FaXDe9hD9ofKwiO2kdva5nGAh1FUAGGrvXkg8OC4xWbRKO3qSBh7cOHyq%2B7QCVzvztueWfEIUTPUwaP%2FpRBZH0hLA4R3l7Fa%2FWTlDpWGMdtjXInuBqYzMK%2BXGO5TzIlzAXb68qaxOVdlqYUtRi1GJegDHpsNnfWECjZGZ4LO1Bqt%2BhIlHq8PGQylvk2B0lks%2Fiut5Yg3fFswnDMzqil78MnpiqgFvEcQbgbsmxNuT%2BjDRcOYIwW0rNxzRU1mJuSHucpA6VrphHYT%2FdnGhGC7DZLeLNU%2B0CKgV6j%2F5lArktBsppzC3fgRCQTbqG3r3lNIeoPZ6GmNnFM4Kf4si3feFwV7Wd4VKxQG7%2Bs078RB10%2BlYXmwUl6QY1Ldoq5I0cD6JThOPQhh%2BeU0XUBon3IKndYjqhUHV7uiJ46QBw61C1CUiSmSbC1xApgRFH2Cr%2FpAFEiU%2FhH9l192h4JE%2Ft%2BVHBi%2BFsvbwY4gMrCMvzPyDUsZTgzpi8b0jMUByHZVFnRuEDpJeprmliNcHcuIoKYhLbmk6cixHgcD8sVY1FtpYYV6hepanN26r73nilj64raoDUdTwKLyJTOkAz4jKXLCSh2TCDCC8Yj3BTrvAU6lZt2Fb0tQVkJ5FqftJD3gL4mrCqqLzj0AHGWjFYmad8HeW8A%2FMmnAfXuVCziSzg%2BpsaxFH%2BoSbhNudtteSife%2B3KPvP8r1us%2BFhxCb0JksAk2NyJXs%2BE%2FRSKvpiy77CAq4frWPmDvDbUBX4KcNWHNdurAwO%2FlciDX23DqEJ875%2Fke25K8PRyYbA9pn7LF25EQ0Q9gTGF2R84PdekwY3nKy6bjDstmoGAerezCYkn19T9ca1%2FIgFonErf0Xt%2FLK1y1lBVb%2B%2FLU4CL%2BrhKymXl6f9es83Xz1ugbfKwiF5u1zBlVabJdEsxLTrxJstoU&Expires=1591889988",

"result_urls": [

"https://reonomy-viserion-prd.s3.amazonaws.com/job_results/d97e773b-d7cf-4483-81e3-7854378f7719.ndjson?AWSAccessKeyId=ASIA...&Signature=gDHagHpEjgFyvqJdwYeMT4vsLPg%3D&x-amz-security-token=IQoJb3JpZ2luX2VjEM7%2F%2F%2F%2F%2F%2F%2F%2F%2F%2FwEaCXVzLWVhc3QtMSJHMEUCIBTiTouH1RHi4eb0%2F4nRH2g9D4hQmqw6uyAC5lEOzWYqAiEA4as4Snhmsv2evC2E8RMCBEWcjoCB0Gg9zFwU7lkn7D0q7gMIRxAAGgw2NTIxOTc3MjE3MjgiDIY33uhJK7Nq3LeafSrLA3MVSxNXmlcl%2FaXDe9hD9ofKwiO2kdva5nGAh1FUAGGrvXkg8OC4xWbRKO3qSBh7cOHyq%2B7QCVzvztueWfEIUTPUwaP%2FpRBZH0hLA4R3l7Fa%2FWTlDpWGMdtjXInuBqYzMK%2BXGO5TzIlzAXb68qaxOVdlqYUtRi1GJegDHpsNnfWECjZGZ4LO1Bqt%2BhIlHq8PGQylvk2B0lks%2Fiut5Yg3fFswnDMzqil78MnpiqgFvEcQbgbsmxNuT%2BjDRcOYIwW0rNxzRU1mJuSHucpA6VrphHYT%2FdnGhGC7DZLeLNU%2B0CKgV6j%2F5lArktBsppzC3fgRCQTbqG3r3lNIeoPZ6GmNnFM4Kf4si3feFwV7Wd4VKxQG7%2Bs078RB10%2BlYXmwUl6QY1Ldoq5I0cD6JThOPQhh%2BeU0XUBon3IKndYjqhUHV7uiJ46QBw61C1CUiSmSbC1xApgRFH2Cr%2FpAFEiU%2FhH9l192h4JE%2Ft%2BVHBi%2BFsvbwY4gMrCMvzPyDUsZTgzpi8b0jMUByHZVFnRuEDpJeprmliNcHcuIoKYhLbmk6cixHgcD8sVY1FtpYYV6hepanN26r73nilj64raoDUdTwKLyJTOkAz4jKXLCSh2TCDCC8Yj3BTrvAU6lZt2Fb0tQVkJ5FqftJD3gL4mrCqqLzj0AHGWjFYmad8HeW8A%2FMmnAfXuVCziSzg%2BpsaxFH%2BoSbhNudtteSife%2B3KPvP8r1us%2BFhxCb0JksAk2NyJXs%2BE%2FRSKvpiy77CAq4frWPmDvDbUBX4KcNWHNdurAwO%2FlciDX23DqEJ875%2Fke25K8PRyYbA9pn7LF25EQ0Q9gTGF2R84PdekwY3nKy6bjDstmoGAerezCYkn19T9ca1%2FIgFonErf0Xt%2FLK1y1lBVb%2B%2FLU4CL%2BrhKymXl6f9es83Xz1ugbfKwiF5u1zBlVabJdEsxLTrxJstoU&Expires=1591889988"

],

"status": "SUCCESS",

"user": "654e9cb7-db66-40cc-8538-c88cebbfa935"

}The file type returned by the result URL is NDJSON. Within the file, each property is represented as a separate JSON object, with a new line (\n) character between each object.

The example on the right shows a JSON object representing a single property from the results. It provides all of the data update times for the property, as well as the property ID (id).

{

"sale_update_time": "2020-05-15",

"shape_update_time": "2020-04-16",

"tax_update_time": "2020-04-16",

"building_update_time": "2020-04-16",

"master_update_time": "2020-05-15",

"mtg_update_time": "2020-04-16",

"id": "f5736c8b-2802-52b5-94a6-7e5283c9b084",

"owner_update_time": "2020-04-16"

}Although an NDJSON file includes valid JSON objects, the file itself isn't valid JSON, so you can't read it using a standard JSON reader. However, if the file is not too large, you can read it line-by-line and process each line using a standard JSON reader. The example shows how you can convert an NDJSON file to a standard JSON list using Python.

import json

with open('./results.ndjson') as ndjson:

results = [json.loads(line) for line in ndjson]

Viewing active batch jobs

GET https://api.reonomy.com/v2/jobs/file

This endpoint lets you check that status of all batch jobs associated with your access token.

Response

GET https://api.reonomy.com/v2/jobs/file returns a count of active jobs associated with your access token and an items list with the information about each job.

Keep in mind that result_urls are only valid for 10 minutes, so it's likely many of the result_url URLs will return <Message>Request has expired</Message>. If that happens, use the job ID (id) and GET /v2/jobs/file/{job_id} to get a new URL.